Authors:

(1) Wen Wang, Zhejiang University, Hangzhou, China and Equal Contribution ([email protected]);

(2) Canyu Zhao, Zhejiang University, Hangzhou, China and Equal Contribution ([email protected]);

(3) Hao Chen, Zhejiang University, Hangzhou, China ([email protected]);

(4) Zhekai Chen, Zhejiang University, Hangzhou, China ([email protected]);

(5) Kecheng Zheng, Zhejiang University, Hangzhou, China ([email protected]);

(6) Chunhua Shen, Zhejiang University, Hangzhou, China ([email protected]).

Table of Links

4 EXPERIMENTS

4.1 Implementation Details

By default, we use GPT-4 [OpenAI 2023] as the LLM for the story to layout generation. The detailed prompts are shown in Appendix A.1. We use Stable Diffusion [Rombach et al. 2022] for text-to-image generation and leverage existing models on the civitai website as the base model for customized generation. For dense control, we use T2I-Adapter [Mou et al. 2023] keypoint control for human characters, and sketch control for non-human characters. In our AutoStory, the only part that requires training is the multi-subject customization process, which takes about 20 minutes for ED-LoRA training and 1 hour for gradient fusion on a single NVIDIA 3090 GPU, while other parts in our pipeline are completely training-free. With the multi-subject customized model prepared, our pipeline can generate plenty of results in minutes.

4.2 Main Results

Our AutoStory supports generating stories from user-input text only, or the user can additionally input images to specify the characters in the story. To validate the generality of our approach, we consider story visualization with different characters, scenes, and image styles. For each story, the text input for the LLM is just one sentence like “Write a short story about a dog and a cat”. For human characters, we additionally declare their names in the input, e.g., “Write a short story about 2 girls. Their names are Chisato and Fujiwara”. Each character is trained with 5 to 30 images, and the input characters are shown in Appendix A.1.

With Character Sample Inputs. As shown in the first two columns of Fig. 4, our approach is able to generate highquality, text-aligned, and identity-consistent story images. Small objects mentioned in the stories are also generated effectively, such as the camera in the third and fourth rows in (a). We attribute this text comprehension and planning capabilities of the LLM, which provides a reasonable image layout without ignoring the key information in the text. The features of the characters in each story are highly consistent, including the characters’ hairstyles, attire, and facial features. In addition, our approach is able to generate flexible and varied poses for each character, such as the half-squatting position in the third row in (a), and a high-five pose in the last row in (b). This is mainly due to our automatically generated dense control conditions, which guide the diffusion model to obtain fine-grained generation results.

With Only Text Inputs. In the case of text input only, we use the method in Sec. 3.4 to automatically generate training data for each character in the story. The generated character data is shown in Appendix A.1. As can be seen from the third and fourth columns in Fig. 4, we are still able to obtain high-quality story visualization results with highly consistent character identities even with only text inputs. The details of the characters in the story images are will-aligned to the text descriptions, e.g., the grandfather looks worried when the granddaughter gets lost, in the third and fourth rows in (c). While in the last row, they both look happy when they are reunited back home. The animal characters also show a variety of poses, for example, in (d), the cat presents varying poses of lying down, standing, or walking. This indicates that our method can generate consistent and high-quality story images of characters even without user input of character training images.

4.3 Comparison with Existing Methods

Compared Methods. Most previous story visualization methods are tailored for specific characters, scenes, and styles on curated datasets, and cannot be applied to generic story visualization. For this reason, we here mainly compare methods that can generalize, including (1) TaleCraft [Gong et al. 2023], a very competitive generic story visualization method; (2) Custom Diffusion [Kumari et al. 2022], a representative multi-concept customization method; (3) paint-by-example [Yang et al. 2022], which can fill characters into the story image to realize story visualization; (4) Make-A-Story [Rahman et al. 2022], a representative story visualization method in constrained story visualization scenarios, which is compared in the qualitative experiments. Since all existing methods rely on the user input character images for training, here we consider the same setting for a fair comparison.

Qulitative Comparison. In order to make a head-to-head comparison with the existing story visualization methods, we adopt the stories in TaleCraft and Make-A-Story, as shown in Fig. 5 and Fig. 6. It should be noted that since the character training images in TaleCraft are not available, we collected training images for each character in the story. Therefore, the input character images of our approach are slightly different from those used by TaleCraft. As shown in Fig. 5, Paint-byexample struggles to preserve the identities of characters. The girls in the generated images differ significantly from the user-provided image of the girl. Although Custom Diffusion performs slightly better in identity preservation, it sometimes generates images with obvious artifacts, such as the distorted

![Figure 5: Comparison with existing story visualization methods. The input characters are shown on the left. Note the results of TaleCrafter [Gong et al. 2023] are directly taken from their paper.](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-9s93zbk.png)

![Figure 6: Comparison with existing story visualization methods on the FlintstonesSV dataset. The input characters are shown on the left. Note the results of Make-A-Story [Rahman et al. 2022] and TaleCrafter [Gong et al. 2023] are directly taken from their paper.](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-ixa3zgf.png)

cat in the second and third images. TaleCraft achieves better image quality but still suffers from certain artifacts, e.g., the cat in the third image is distorted and one of the girl’s legs in the fourth image is missing. In contrast, our method is able to achieve superior performance in terms of identity preservation, text alignment, and generation quality.

Similarly, In Fig. 6, it can be seen that Make-A-Story generates story images in low quality, which is mainly due to the fact that it’s tailored for the FlintstonesSV [Maharana and Bansal 2021] dataset, and thus inherently limited by generation capacity. TaleCraft shows significant improvement in generation quality, but it has limited alignment to text, e.g., the missing suitcase in the first image, which we assume is due to the limited layout generation capacity of the discrete diffusion model for layout generation. In contrast, our method is able to text-aligned results, thanks to the LLM’s strong text comprehension and layout planning capabilities. Interestingly, there are significant differences in image style between our AutoStory and TaleCraft. We hypothesize that this is mainly caused by the difference in character data for training.

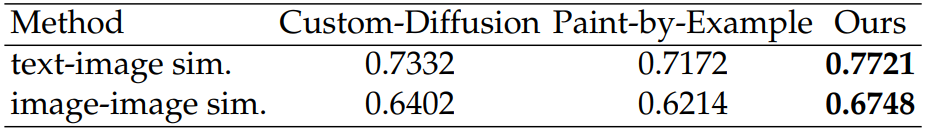

Quantitative Comparison. Following the literature [Gong et al. 2023], we consider two metrics to evaluate the generated results: (1) text-to-image similarity, which is measured by the

cosine similarity between the embeddings of texts and images in the CLIP feature space; (2) image-to-image similarity, which is measured by the cosine similarity between the average embedding of character images for training and the embedding of generated story images in CLIP image space. We conduct experiments on 10 stories with a total of 71 prompts and corresponding images. The results are shown in Table 1. It can be seen that our AutoStory outperforms existing methods by a notable margin in both text-to-image similarity and image-toimage similarity, which demonstrates the superiority of our method.

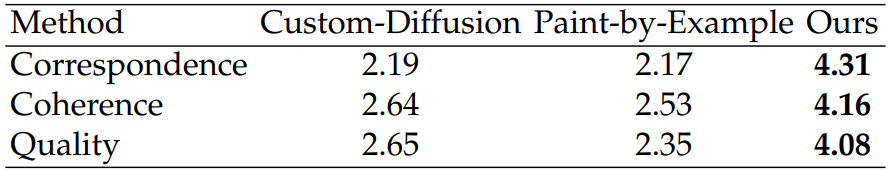

User Study. We conduct user studies on 10 stories, with an average of 7 prompts per story. During the study, 32 participants are asked to rate the story visualization results on three dimensions: (1) the alignment between the text and the images; (2) the identity-preservation of the characters in the images; and (3) the quality of the generated images. We asked users to score each set of story images on a Likert scale of 1-5. The results for each method are shown in Table 2. It can be seen that our AutoStory outperforms competing methods by a large margin in all three metrics, which indicates that our method is more favored by users.

4.4 Ablation Studies

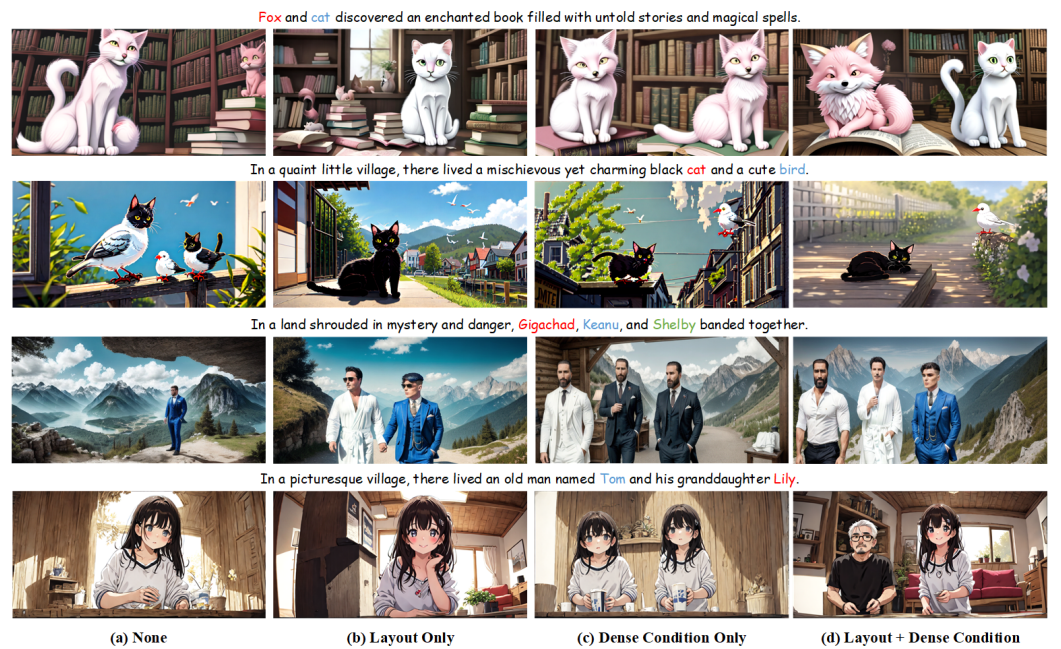

Ablations on Control Signals. We evaluate the necessity of both layout control and dense condition control in this section. The layout control refers to the bounding boxes indicating object locations and the corresponding local prompts, while the dense condition control refers to the composed condition, such as sketches and keypoints. The results are shown in Fig. 7, with the first two rows using sketches and the last two rows using keypoints as the dense condition. We have the following observations. Firstly, when no control conditions are used, the model generates images with missing objects and blends the properties of different objects, as shown in Fig. 7 (a). For example, only one character is generated in the third line, while the other two characters in the text are ignored. In the second line, there is a conflict between the attributes of a cat and a bird, and the generated animal has the head of a cat and the wings of a bird. This is mainly due to the fact that the generative model can not well-capture the textual input to generate images that have proper layouts and differentiate the attributes of the varying entities. Secondly, with the addition of the layout control, the concept conflict is significantly alleviated, mainly because the layout control helps to associate specific regions in the image with the corresponding local prompts. However, the problem of missing subjects in the images still exists, for example, only two characters are generated in the third row, while the character Gigachad is ignored in Fig. 7 (b). We suspect that this is due to the limited influence of the layout control on the feature updating in the model. Thirdly, in the case of only adding the dense control condition, the model is able to effectively generate all the entities mentioned in the text without omitting them, mainly because the dense control condition provides sufficient guidance to the model. However, the conceptual conflicts among the characters persist, for example, the attributes of the man in the fourth line are dominated by the attributes of the girl. This is mainly due to the fact that the character regions in the image are incorrectly and strongly associated with the other characters in the text. Lastly, our approach combines layout and dense conditional control can avoid object omissions and conceptual conflicts among characters, resulting in high-quality story images. We attribute this to the proper layout generated by the LLM and the effective conditioning paradigm during image generation.

Ablations on Designs in Multi-view Character Generation. To support the generation of story images from text inputs only, we propose an identity-consistent image generation approach to eliminate character-wise data collection, as detailed in Sec. 3.4. Here we ablate the design in this module and consider the following baseline approaches for comparison: (1) the pure-sd variant, which generates multiple character images directly using the Stable Diffusion model, without any additional operations. (2) the One-2-3-45 variant, which combines Stable Diffusion and One-2-3-45 for identity-consistent character image generation. Specifically, a single character image is first generated using Stable Diffusion, and then multi-view character images are obtained by applying One-2-3-45 to the single generated image. (3) the temporal-sd variant, which treats multiple character images as a video and leverages the extended self-attention in Sec. 3.4 for training-free consistency modeling. Firstly, pure-sd fails to obtain identity-consistent images as training data for a single character. As shown in the first column in Fig. 8, the color and the body shape of dogs in different images vary significantly. Secondly, the identities of the dogs in the images obtained using temporal-sd are consistent, as shown in the second column. This is because after adding extended self-attention, the latent features of several images can interact with each other, which substantially improves the consistency among images. However, the dogs in these images are all displayed in a positive smiling posture, indicating the lack of diversity. Thirdly, the images obtained using One-2-3-45 show strong diversity, but suffer from certain artifacts, such as the deformation of the dog’s head, as

shown in the third column. This is mainly because One-2-3-45 can not guarantee the consistency of the generated multi-view images. Lastly, our method is able to enhance diversity while ensuring the identity consistency of the generated character images. This is mainly due to the fact that we utilize the sketch of the images obtained by One-2-3-45 to guide the model for generating diverse character data, while using extended selfattention to ensure the consistency among images. In addition, the image priors cherished by Stable Diffusion can substantially mitigate the negative impact caused by the imperfect sketches obtained from images generated by One-2-3-45. As can be seen, the dogs generated by our method are free from distortions.

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.