Authors:

(1) Wen Wang, Zhejiang University, Hangzhou, China and Equal Contribution ([email protected]);

(2) Canyu Zhao, Zhejiang University, Hangzhou, China and Equal Contribution ([email protected]);

(3) Hao Chen, Zhejiang University, Hangzhou, China ([email protected]);

(4) Zhekai Chen, Zhejiang University, Hangzhou, China ([email protected]);

(5) Kecheng Zheng, Zhejiang University, Hangzhou, China ([email protected]);

(6) Chunhua Shen, Zhejiang University, Hangzhou, China ([email protected]).

Table of Links

A APPENDIX

A.1 More Implementation Details

Detailed Prompts for the LLM. As described in Sec. 3 in the main text, we utilize LLMs to accomplish the story and layout generation. Specifically, we leverage the LLM for (1) generating the story, (2) dividing the story into panels, and (3) generating prompts and layout from the panels. In implementation, we further split the third step into two sub-steps, where we first convert the text of each panel into prompts suitable for generating the image, and then parse the prompts into the layout and local prompts. The detailed prompts and sampled LLM outputs are shown in Fig. 11.

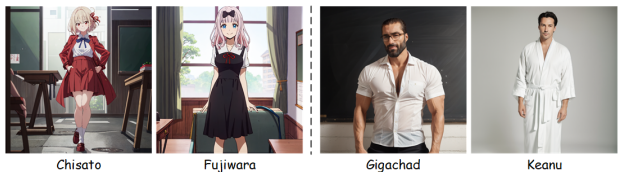

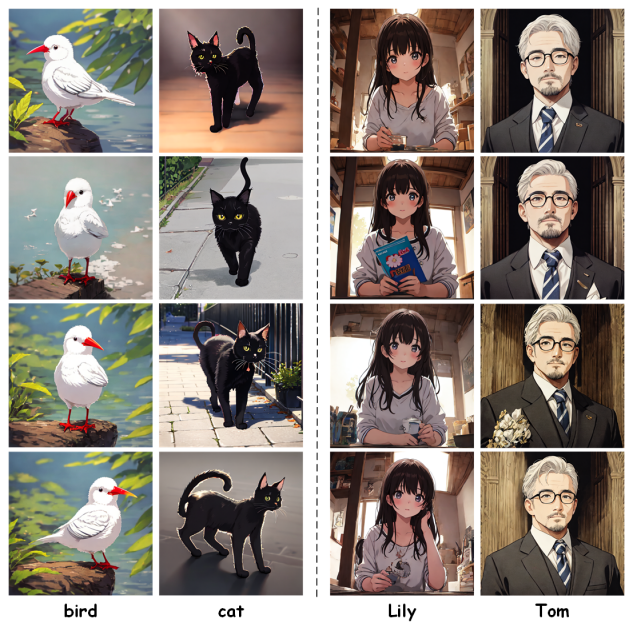

More Details on the Main Results. Fig. 4 shows the story image generation results of our method with varying characters, storylines, and image styles. Here, we present the character images used to train the customized model for each story. The story visualization results in the left two columns in Fig. 4 are obtained with the user-supplied character images. The corresponding characters are shown in Fig. 9. Differently, the story visualization results in the right two columns are obtained with only the story texts as inputs, and the characters are automatically generated by our method. The generated images for each character are shown in Fig. 10. It can be seen that the animal and human characters generated by our method are of high quality and consistent identities. The images of a single animal character show high diversity, with the orientation of the bird and the cat changing constantly from left to right. The human characters, however, are slightly less diverse, with a lower degree of variance in facial orientation. We believe that this is mainly due to the fact that the diffusion model is trained primarily on humans with frontal faces, making it difficult to generate side-facing images. Nonetheless, the character image data generated by our method can be effectively used for training customization models in story visualization, without introducing overfitting. It is worth mentioning that even though the character Tom in our generated data wears suits, we can generate images with Tom wearing a T-shirt after we specify that the character wears a T-shirt in the local prompt, as shown in the story visualization in Fig. 4 (d). Moreover, the characteristics of Tom are well-maintained, such as the shape of his face and the white hair. This indicates that the customized model trained with our generated data learns the character’s identity without overfitting.

A.2 Intermediate Results Visualization

To better understand our approach, in this section, we visualize the intermediate process of generating a single story image, as shown in Fig. 12 and Fig. 13. We first generate singlecharacter images based on the Local prompts generated by LLM, as shown in (a) and (b). The perception models, including Grounding-SAM, PidiNet, and HRNet, are then utilized to obtain the keypoints of human characters, or sketches of nonhuman characters, as shown in (c) and (d). Subsequently, the LLM-generated layout is utilized to compose the keypoints or sketches of individual subjects into a dense condition for

generating story images, as shown in (d). Finally based on the dense conditions, prompts, and layout, we generate the story image as shown in (f).

A.3 More Story Visualization Results

In Fig. 14 and Fig. 15, we showcase more story visualization results of our method. As can be seen, our AutoStory can produce high-quality, text-aligned, and identity-consistent story images, even when generating long stories.

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.